My main tech project over the last few months has been an all-in-one home lab. The lab is a high-end PC running a hypervisor, which hosts VMs and provides a flexible infrastructure for experiments. One of the main components of such a setup is a storage VM that provides storage to the other VMs, giving us the benefits of storage aggregation and management. The VM manages the disks and exposes them as storage over the network. Such a service is called Network Attached Storage.

The disks can be organized into usable storage units in many ways. A popular format (file system) for organizing storage nowadays is ZFS. ZFS has many advantages and disadvantages, which I won’t get into as there are plenty of resources online. One thing that I found revolutionary and exciting is that the file system and the RAID layer are one, allowing the layers to affect each other, which in turn enables interesting properties.

There are many options for deploying a Network Attached Storage (NAS) solution that supports ZFS. I decided to test the following three most popular free systems:

- NAS4Free 9.3

- FreeNAS 9.3

- NexentaStor 4.0.4 – community edition

There are many differences between the systems. NAS4Free and FreeNAS share a common history and are both based on FreeBSD, a Unix-like OS. NexentaStor, on the other hand, is based on Illumos/OpenSolaris, another Unix-like OS.

I found myself spending much more time on storage than I had hoped. What can I say, storage is a discipline many people spend their entire lives learning and practicing.

Interoperability

One of the features of ZFS is the ability to move the data disks to another machine if needed. This has real practical value, since servers in the field often fail or need to be upgraded to newer hardware. The idea that you can shut down a system, take the disks out, plug them into another system and import the pool is impressive. ZFS is even expected to scan the disks, identify its devices and put the pieces together automatically. However, as I have learned from working with Web technologies for many years, there is often a gap between specification and implementation. Let’s find out how well the different NAS systems import storage created by the other systems.

Setup

Each NAS system was installed in a VM on the all-in-one server running VMware ESXi 6.0. Each VM got 8GB of RAM and 2 cores. An LSI disk controller was passed through to the VMs. Only one VM ran at a time, with exclusive access to the controller and the attached disks. The disks were a mix of 3TB HDDs and 256GB SSDs.

On each system in turn, I created ZFS storage pools, exported them and attempted to import them on the other systems. My first choice was always to perform these steps through the system’s web UI. Sometimes it was necessary to drop to a lower level, as will be shown in a moment.

Expectations

Versioning

Ideally, any system is engineered so that it can be extended in the future. For this purpose, ZFS implements a versioning system, which allows new features to be introduced so that ZFS can stay state-of-the-art. Unfortunately, not all new features are backward compatible, so pools using a newer version of the format may not be usable on older systems.

Up to a certain point, ZFS versioning was based on a single version number. Today’s implementation has switched to “feature”-based versioning (such pools report version 5000), where the version is effectively a “bit mask”, a combination of individual feature flags. The status of a pool’s features can be inspected with the following command (output truncated):

    # zpool get all [pool] | grep feature@
    ...
    [pool]  feature@hole_birth          active    local
    [pool]  feature@extensible_dataset  enabled   local
    [pool]  feature@bookmarks           enabled   local
    [pool]  feature@filesystem_limits   enabled   local
    [pool]  feature@meta_devices        disabled  local
    ...

A feature can be in one of three states:

- disabled – feature is supported but the pool is configured not to use this feature

- enabled – feature is supported and allowed to be used, but the data of the pool has yet to be affected by the feature

- active – the pool’s on-disk data now depends on this feature, so a system that does not support the feature cannot import the pool (or, for read-only compatible features, can only import it read-only)

An enabled feature becomes active once it is actually needed, for example when certain data would benefit from a specific encoding format or compression. Certain features never activate because they only affect the read process, while others activate right away because the trigger for their activation is always present.
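Checking these states by hand gets tedious across several pools. A minimal Python sketch, assuming the whitespace-separated NAME PROPERTY VALUE SOURCE columns that `zpool get` prints (as in the output above), maps each feature to its state and picks out the active ones, since those are what restrict where a pool can be imported. The function names and the pool name `tank` are illustrative.

```python
def parse_feature_states(zpool_get_output: str) -> dict[str, str]:
    """Map feature name -> state ('disabled', 'enabled' or 'active').

    Assumes one property per line in the NAME PROPERTY VALUE SOURCE
    layout of `zpool get all <pool>`; all other lines are skipped.
    """
    states = {}
    for line in zpool_get_output.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1].startswith("feature@"):
            states[fields[1][len("feature@"):]] = fields[2]
    return states


def active_features(states: dict[str, str]) -> list[str]:
    """Features whose on-disk data any importing system must understand."""
    return sorted(name for name, state in states.items() if state == "active")


# Sample input mirroring the truncated output above (hypothetical pool name).
sample = """\
NAME  PROPERTY                    VALUE     SOURCE
tank  feature@hole_birth          active    local
tank  feature@extensible_dataset  enabled   local
tank  feature@bookmarks           enabled   local
tank  feature@filesystem_limits   enabled   local
tank  feature@meta_devices        disabled  local
"""

states = parse_feature_states(sample)
print(active_features(states))  # ['hole_birth']
```

The header line is skipped because its second column is not a `feature@` property.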

Results

Versioning

The three systems investigated do not support the same set of features. As a result, not all migrations are possible.

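| Feature | NAS4Free | FreeNAS | NexentaStor |
|---|---|---|---|
| async_destroy | V | V | V |
| empty_bpobj | V | V | V |
| lz4_compress | V | V | V |
| multi_vdev_crash_dump | V | V | V |
| spacemap_histogram | V | V | V |
| enabled_txg | V | V | V |
| hole_birth | V | V | V |
| extensible_dataset | V | V | V |
| bookmarks | V | V | V |
| filesystem_limits | V | V | V |
| embedded_data | | V | |
| large_blocks | | V | |
| meta_devices | | | V |
| vdev_properties | | | V |
| class_of_storage | | | V |
| checksum_sha1crc32 | | | V |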

It is worth mentioning that most systems enable all the features they support by default, though this is not mandatory.

The interesting features, those not supported by all three systems, are:

- embedded_data – Blocks that compress very well are stored inside the block pointer itself, using even less space.

- large_blocks – Support for blocks larger than 128KB.

- meta_devices (read-only compatible) – Dedicated devices for metadata.

- vdev_properties (read-only compatible) – Vdev-specific properties.

- class_of_storage (read-only compatible) – Properties for groups of vdevs.

- checksum_sha1crc32 – Support for sha1crc32 checksum.

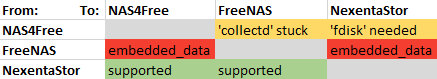

Two of the features are supported only in FreeNAS and four only in NexentaStor. NAS4Free supports a subset of the other systems’ features and has no “unique” ones. Fortunately, in my case the four features unique to NexentaStor were “enabled” but not “active”, so I was able to import those pools into the other systems. Unfortunately, the “embedded_data” feature used by FreeNAS activates automatically, which prevented me from importing pools upgraded by FreeNAS into the other systems.
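The table and the feature states combine into a small model. This Python sketch encodes the feature sets from the table above (the system names are only dictionary keys) and predicts the outcome of an import using the feature-flag rule described earlier: only *active* features the target does not support matter, and if all of those are read-only compatible the pool can still be imported read-only. It models feature flags only. The quirks described below are not captured, and the example pools are hypothetical.

```python
# Feature support per system, copied from the table above.
COMMON = {
    "async_destroy", "empty_bpobj", "lz4_compress", "multi_vdev_crash_dump",
    "spacemap_histogram", "enabled_txg", "hole_birth", "extensible_dataset",
    "bookmarks", "filesystem_limits",
}
SUPPORTED = {
    "NAS4Free": COMMON,
    "FreeNAS": COMMON | {"embedded_data", "large_blocks"},
    "NexentaStor": COMMON | {"meta_devices", "vdev_properties",
                             "class_of_storage", "checksum_sha1crc32"},
}
# Features listed above as read-only compatible.
READ_ONLY_COMPATIBLE = {"meta_devices", "vdev_properties", "class_of_storage"}


def unique_features(system: str) -> set[str]:
    """Features supported by `system` and by none of the other systems."""
    others = set().union(*(f for s, f in SUPPORTED.items() if s != system))
    return SUPPORTED[system] - others


def import_mode(pool_states: dict[str, str], target: str) -> str:
    """Predict 'read-write', 'read-only' or 'refused' for an import.

    Disabled and merely enabled features are ignored; only active
    features unknown to the target can block the import.
    """
    blocking = {
        name for name, state in pool_states.items()
        if state == "active" and name not in SUPPORTED[target]
    }
    if not blocking:
        return "read-write"
    if blocking <= READ_ONLY_COMPATIBLE:
        return "read-only"
    return "refused"


# Hypothetical pools: NexentaStor-only features enabled but inactive,
# and a FreeNAS pool where embedded_data has activated.
nexenta_pool = {"hole_birth": "active", "meta_devices": "enabled",
                "checksum_sha1crc32": "enabled"}
freenas_pool = {"hole_birth": "active", "embedded_data": "active"}

print(sorted(unique_features("FreeNAS")))     # ['embedded_data', 'large_blocks']
print(import_mode(nexenta_pool, "NAS4Free"))  # read-write
print(import_mode(freenas_pool, "NAS4Free"))  # refused
```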

Quirks

Interestingly enough, some migration paths were difficult and didn’t work out of the box.

Importing in FreeNAS

I had difficulties importing the pools through the FreeNAS UI. The pools imported just fine from the shell, which showed they were valid as far as ZFS was concerned, but the import through the UI would hang. Importing only from the shell, however, leaves the FreeNAS software unaware of the pools, which breaks functionality and means the pools are not mounted after a reboot.

Watching the debug log with

    tail -f /var/log/debug.log

showed that FreeNAS was stuck waiting for the collectd service to restart:

    Executing: /usr/sbin/service collectd restart

Fortunately, this is easily resolved by killing the service

    pkill -9 collectd

at which point the process continues.

This issue may have been specific to my setup. Other members of the community were not able to explain it.

Importing large disks from NAS4Free to NexentaStor

Trying to import a single-disk 3TB pool from NAS4Free into NexentaStor resulted in the following error message:

    root@nexenta:/volumes# zpool import
       pool: tank1
         id: 17717822833491017053
      state: UNAVAIL
     status: One or more devices are missing from the system.
     action: The pool cannot be imported. Attach the missing devices and try again.
        see: http://illumos.org/msg/ZFS-8000-3C
     config:

            tank1                      UNAVAIL  insufficient replicas
              c2t50014EE2B5B23B15d0p0  UNAVAIL  cannot open

Although this looks fatal, the data is valid and imports fine on other systems. Further investigation shows that ZFS can’t read all the labels on the disk:

    root@nexenta:/dev/rdsk# zdb -l c2t50014EE2B5B23B15d0p0
    --------------------------------------------
    LABEL 0
    --------------------------------------------
        version: 5000
        name: 'tank1'
        state: 1
        txg: 892
        pool_guid: 17717822833491017053
        hostid: 1747604604
        hostname: 'nas4free.local'
        top_guid: 10241644842269293026
        guid: 10241644842269293026
        vdev_children: 1
        vdev_tree:
            type: 'disk'
            id: 0
            guid: 10241644842269293026
            path: '/dev/da4.nop'
            phys_path: '/dev/da4.nop'
            whole_disk: 1
            metaslab_array: 34
            metaslab_shift: 34
            ashift: 12
            asize: 3000588042240
            is_log: 0
            create_txg: 4
        features_for_read:
            com.delphix:hole_birth
    --------------------------------------------
    LABEL 1
    --------------------------------------------
        [redacted, like label 0]
    --------------------------------------------
    LABEL 2
    --------------------------------------------
    failed to read label 2
    --------------------------------------------
    LABEL 3
    --------------------------------------------
    failed to read label 3

Each disk device used by ZFS has four 256KB label areas containing metadata. Two of them are at the beginning of the disk and two are at the end (aligned to 256KB, as far as I can tell). I am not sure why the system “failed to read” the labels, since reading the disk with dd showed the data in the right places, and I was not able to identify the cause. It has been suggested that this is just a difference between FreeBSD and OpenSolaris. I did, however, stumble on a workaround: apparently, using fdisk to create an MBR on the disk caused the OpenSolaris-based ZFS to identify the labels on the device. The MBR doesn’t overwrite any ZFS data because ZFS leaves the first 8KB of the disk blank.
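The label layout described above reduces to a little arithmetic. This Python sketch assumes the OpenZFS layout: four 256KB labels, labels 0 and 1 at the start of the device, labels 2 and 3 at the end of the device size rounded down to a multiple of 256KB, and the first 8KB of every label left blank. It computes the label offsets and checks whether a write, such as a 512-byte MBR at offset 0, would overlap the used part of any label. The function names and the device size are illustrative. The model does not explain why NexentaStor failed to read labels 2 and 3.

```python
# Assumed OpenZFS on-disk constants (see the lead-in).
LABEL_SIZE = 256 * 1024  # each of the four vdev labels is 256 KB
NUM_LABELS = 4
BLANK_PAD = 8 * 1024     # first 8 KB of every label is left blank


def label_offsets(device_size: int) -> list[int]:
    """Byte offsets of labels 0-3 on a device of `device_size` bytes."""
    # Labels 2 and 3 are placed relative to the size rounded down to 256 KB.
    aligned = device_size - device_size % LABEL_SIZE
    offsets = []
    for i in range(NUM_LABELS):
        offset = i * LABEL_SIZE
        if i >= NUM_LABELS // 2:
            offset += aligned - NUM_LABELS * LABEL_SIZE
        offsets.append(offset)
    return offsets


def touches_label(start: int, length: int, device_size: int) -> bool:
    """True if writing [start, start + length) overlaps any label beyond
    its blank 8 KB pad. Pool data between the labels is not checked."""
    end = start + length
    for offset in label_offsets(device_size):
        if start < offset + LABEL_SIZE and end > offset + BLANK_PAD:
            return True
    return False


size = 10 * 1024 * 1024 + 1000  # tiny hypothetical device, for readability
print(label_offsets(size))                # [0, 262144, 9961472, 10223616]
print(touches_label(0, 512, size))        # False: an MBR fits in the pad
print(touches_label(8192, 512, size))     # True: past label 0's blank pad
```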

Who is to blame? On the one hand, NAS4Free uses whole disks for ZFS devices rather than the recommended GPT partitions. On the other hand, NexentaStor clearly can’t read the valid labels at the end of the disk unless the disk has some kind of partitioning…

Matrix

We end up with the following migration matrix

Apart from the expected feature differences there are some quirks, but overall, workarounds exist for every scenario that should be supported.

I wonder if there is a way to make FreeNAS not enable “embedded_data” on new volumes, which would allow them to be imported on other systems. Otherwise there is significant “lock-in”, and one would need to resort to creating a second storage pool or a backup in order to migrate by copying.
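One possible approach, not tested in this setup: the illumos and FreeBSD `zpool create` commands accept `-d`, which creates a pool with every feature disabled, and individual features can then be turned on with `-o feature@NAME=enabled`. The Python sketch below only builds such a command line from the intersection of the FreeNAS and NAS4Free feature sets in the table above. The pool and device names are hypothetical. It is an open assumption whether the FreeNAS UI correctly picks up a pool created this way from the shell (see the import quirk above). Note also that running `zpool upgrade` on such a pool would enable all remaining supported features.

```python
import shlex

# Feature sets copied from the table above.
NAS4FREE = {
    "async_destroy", "empty_bpobj", "lz4_compress", "multi_vdev_crash_dump",
    "spacemap_histogram", "enabled_txg", "hole_birth", "extensible_dataset",
    "bookmarks", "filesystem_limits",
}
FREENAS = NAS4FREE | {"embedded_data", "large_blocks"}


def zpool_create_args(pool: str, vdevs: list[str], features: set[str]) -> list[str]:
    """argv for `zpool create` with only `features` enabled.

    `-d` starts the pool with every feature disabled; each
    `-o feature@NAME=enabled` then re-enables a single feature.
    """
    args = ["zpool", "create", "-d"]
    for name in sorted(features):
        args += ["-o", f"feature@{name}=enabled"]
    return args + [pool, *vdevs]


# Hypothetical pool and device; only features NAS4Free also supports.
args = zpool_create_args("tank", ["da4"], FREENAS & NAS4FREE)
print(shlex.join(args))
```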

Let me know in the comments if this helped you or if you encountered similar scenarios. Happy ZFSing.

Pingback: Understanding And Improving VMware Snapshot Integration In FreeNAS | Arik Yavilevich's Blog

What about now that NAS4Free 10.2 supports embedded_data and large_blocks?

Hi Davide, that is an interesting question. With support for embedded_data and large_blocks, it is likely that you could import FreeNAS volumes into NAS4Free 10.2. However, as shown in the article, issues other than features can affect compatibility. My first concern would be whether partitioning is used and, if so, how. As always in these cases, it is best to experiment in a test environment. Unfortunately, I no longer have the setup I used for this experiment. If anybody decides to investigate this, I am happy to link to updated results.