Introduction

There are 4 parameters that are important for any storage setup. They are, in no particular order:

- Performance – speed of reading and writing data at various usage patterns [measured in bytes/sec or operations/sec for each pattern]

- Capacity – how much space you can use [measured in bytes]

- Cost – $ per GB [measured in money/bytes]

- Redundancy – how many disks and which disks you can lose without losing data [measured by min and max disks to cause data loss]

Of course, some of these parameters, specifically redundancy, are more important for a corporate storage solution than for a home setup.

This time I will focus on Performance. You don’t control this parameter directly. You put certain drives and hardware in and you might have a general idea of what will happen, but “actual results may vary”. Therefore, there is a need to measure this property. This article will cover best practices for measuring performance, suggested tools and performance examples.

Two words about the other parameters: since I am not going to discuss RAIDs in this article (will be covered separately in the future), I will be assuming simple single disk setups. For a single disk setup capacity, cost and redundancy are trivial. The capacity is written on the disk, the cost is what you bought it for and redundancy is non-existent. Consequently, for a single disk volume, performance is the only non-trivial parameter. Let’s measure performance!

How to measure IO performance?

As the title suggests, performance is measured, not calculated. This is not because it is impossible to calculate, but because it is extremely difficult to calculate. The challenge in calculating performance is that you need to model all the components on the way – the disk, the channel, the controller, caches, the OS, etc. It is much easier to just build the setup and measure it.

Tools

To take measurements, you should use an IO measuring program. Such a program will, at least, output the metrics and, most often, it will also generate the usage patterns. There are 3 tools you should consider for this purpose in the Windows eco-system:

- Performance Monitor – a generic, built-in monitoring system that also features a rich collection of “Physical disk” metrics. If you are going to generate your own load, this is the tool to use. A basic UI for this system is available directly in the Windows 7/8 “Resource Monitor”.

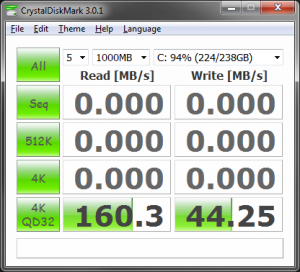

- CrystalDiskMark – an open-source program that I prefer for 95% of use cases. It generates the main usage patterns and measures them.

- Iometer (latest version) – an open-source program that has been around for a long time and is very feature-rich. As is often the case, feature-rich also means complex. The main advantage of Iometer over simpler tools is the ability to simulate many custom and composite usage patterns.

Common usage patterns

Since there are infinitely many possible sequences of read and write operations, a small set of basic usage patterns has become “popular”. It is critical to have common patterns across the board, as comparing metrics of different patterns is like comparing apples to oranges. The common basic usage patterns are:

- Sequential read of large amounts (~512KB)

- Sequential write of large amounts (~512KB)

- Random read of small blocks (512B to 4KB), one read at a time (queue depth=1)

- Random write of small blocks (512B to 4KB), one write at a time (queue depth=1)

- Random read of small blocks (512B to 4KB), several reads in parallel (queue depth>=8)

- Random write of small blocks (512B to 4KB), several writes in parallel (queue depth>=8)

The first pattern pair is to simulate reading and writing of large files, the second one is to simulate random access without a queue and the third to simulate random access with a queue. Another common case that is often used but not listed is random reading and writing of large blocks.
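To make the difference between the sequential and random patterns concrete, here is a minimal Python sketch of the offsets each pattern would touch. The function names, the fixed seed and the block-aligned random offsets are assumptions made for illustration; real tools such as CrystalDiskMark or Iometer generate their load in their own way.

```python
import random
from typing import Iterator


def sequential_offsets(area_size: int, block_size: int) -> Iterator[int]:
    """Yield block offsets front to back, as a large-file read or write would."""
    for offset in range(0, area_size - block_size + 1, block_size):
        yield offset


def random_offsets(area_size: int, block_size: int, count: int,
                   seed: int = 0) -> Iterator[int]:
    """Yield `count` block-aligned offsets picked uniformly at random."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    blocks = area_size // block_size
    for _ in range(count):
        yield rng.randrange(blocks) * block_size


if __name__ == "__main__":
    MIB = 1024 * 1024
    # Four large (512KB) blocks, visited in order.
    print(list(sequential_offsets(2 * MIB, 512 * 1024)))
    # Five small (4KB) blocks, scattered over the same area.
    print(list(random_offsets(2 * MIB, 4096, 5)))
```

A queue depth of 1 would mean issuing these operations one at a time; a deeper queue would mean keeping several of the random operations in flight at once, which this sketch does not attempt to model.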

The unit of measure for these metrics is either bytes/sec (usually MB/s) or operations per second (called IOPS). The duality is due to two different bottlenecks of IO devices: throughput and processing logic. IOPS is the popular metric for tests that stress the processing logic (lots of small operations), and MB/s is the metric for tests that stress the throughput (large operations). Imagine your IO system as a fleet of trucks carrying gravel from place A to B. Sending a large amount of gravel will stress the number of trucks and their capacities, whereas sending lots of small buckets of gravel will stress the ability to coordinate the fleet and do a lot of round trips.
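The two units are tied together by the block size: throughput equals IOPS multiplied by the size of each operation. The sketch below shows the conversion in Python. The function names are mine, and it assumes 1 MB = 1,000,000 bytes and a 4KB block of 4096 bytes; check which convention your tool uses.

```python
BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes


def iops_from_throughput(mb_per_s: float, block_bytes: int) -> float:
    """Operations per second needed to move `mb_per_s` in blocks of `block_bytes`."""
    return mb_per_s * BYTES_PER_MB / block_bytes


def throughput_from_iops(iops: float, block_bytes: int) -> float:
    """Throughput in MB/s achieved by `iops` operations of `block_bytes` each."""
    return iops * block_bytes / BYTES_PER_MB


if __name__ == "__main__":
    # A random read result of 0.3 MB/s with 4KB blocks is about 73 IOPS.
    print(round(iops_from_throughput(0.3, 4096)))
    # The same number of operations with 512KB blocks would move far more data.
    print(throughput_from_iops(73, 512 * 1024))
```

This is why a small-block test can report a tiny MB/s figure on a disk that is working as hard as it can: the limit being hit is the number of operations, not the amount of data.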

Having metrics for the “popular” patterns will allow you to compare various disks and various computers in an “apples vs apples” fashion. Furthermore, given the metrics of the “basic” patterns, you can get a good feel of what the metrics would be for composite patterns. Some sites will also define “common” composite usage patterns, such as “a workstation”, “a file server”, “a web server”, “a database server”, etc. and test those.

Analysis

Now that you have a list of measurement programs and an understanding of the basic usage patterns, you can start testing and comparing different systems and disks.

Ok, so besides getting hard numbers for the performance metrics, what kind of insights can we discover from measuring IO usage patterns?

- Comparing sequential vs random access can provide information about seek time of the device.

- Sequential metrics can provide information about the device’s maximum throughput.

- Random metrics can provide information about the device’s ability to process instructions.

- Comparing random read access with and without a queue can provide information about the device’s ability to optimize execution order.

- Comparing random read and write speeds can provide information about the device’s write cache capabilities.
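As an illustration of the first point, the following Python sketch turns a random read result at queue depth 1 into an approximate time per operation, and compares it with the sequential result. The function names are hypothetical, and the calculation assumes 1 MB = 1,000,000 bytes and that the operations are issued strictly one after another.

```python
def avg_time_per_op_ms(mb_per_s: float, block_bytes: int) -> float:
    """Approximate time per operation at queue depth 1, in milliseconds."""
    if mb_per_s <= 0 or block_bytes <= 0:
        raise ValueError("throughput and block size must be positive")
    ops_per_s = mb_per_s * 1_000_000 / block_bytes
    return 1000.0 / ops_per_s


def sequential_to_random_ratio(seq_mb_per_s: float, rand_mb_per_s: float) -> float:
    """How many times faster sequential access is than random access."""
    return seq_mb_per_s / rand_mb_per_s


if __name__ == "__main__":
    # 0.3 MB/s of random 4KB reads works out to roughly 13.7 ms per read.
    print(f"{avg_time_per_op_ms(0.3, 4096):.1f} ms")
    # Sequential reads at 86 MB/s are a few hundred times faster than that.
    print(f"{sequential_to_random_ratio(86, 0.3):.0f}x")
```

For a mechanical disk, a per-operation time in the range of ten or more milliseconds is dominated by moving the head and waiting for the platter, which is why this comparison hints at the seek time of the device.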

Benchmarking rules

You should pay attention to these factors when benchmarking:

- Make sure to test when there are no other programs using the disk. It is best to test when a disk is empty but it is not always possible. Note that closing all the windows doesn’t guarantee that no other programs are using the disk. Use monitoring tools to see that there is no disk activity before you start the test.

- Does your program of choice use a file on the disk or the whole disk to test? Testing on a file can be less accurate. The file might become allocated at the start of the disk or it can be allocated at the end. This will affect the metrics. Also, if the file is fragmented, it might affect the result. Iometer has an impressive ability to benchmark an unformatted disk.

- Is your program of choice able to bypass the OS cache? It is common for today’s systems to have 8GB or more of RAM. If your test file is not large enough, it might be cached by the OS, producing high results that do not really represent the IO device’s performance. It is advisable to use software that can control that.

- Disks and disk controllers have caches. This is wonderful for performance, but you need to know how they are configured in order to interpret the results correctly.

Sharing and comparing

Some sites do reviews and post their results online. This is, of course, true for all kinds of benchmarks, not just IO ones.

The two leading sites in this space are

Performance examples

| Disk | Seq R MB/s | Seq W MB/s | Rand R MB/s | Rand W MB/s | Rand R QD MB/s | Rand W QD MB/s |
|---|---|---|---|---|---|---|
| Seagate Bar. 250GB ~5 y.o. * | 86 | 89 | 0.3 | 0.7 | 0.5 | 0.7 |
| WDC Blue 320GB ~3 y.o. @ | 57 | 79 | 0.3 | 1.4 | 0.3 | 1.4 |
| WDC Blue 1TB New * | 182 | 179 | 0.7 | 2.0 | 0.7 | 2.0 |
| WDC Green 2TB Gen1 * | 74 | 64 | 0.3 | 0.2 | 0.4 | 0.2 |
| WDC Green 2TB Gen2 * | 112 | 109 | 0.3 | 0.9 | 0.4 | 0.9 |
| Seagate RAID1 500GB | 74 | 67 | 0.4 | 0.9 | 1.6 | 0.9 |
| Transcend SSD720 256GB # | 242 | 115 | 15.9 | 10.5 | 121.8 | 44.3 |
| Crucial m4 64GB mSATA * | 386 | 93 | 17.7 | 6.5 | 22.0 | 6.8 |
| Crucial m4 64GB mSATA AHCI | 491 | 110 | 19.0 | 6.7 | 95.5 | 7.6 |

@ The disk is connected via a PATA channel.

* These disks are connected in “IDE” mode (as opposed to “AHCI” mode), either because of the capabilities of the machine in which they are installed or for the sake of benchmarking. “IDE” mode is a limited, backward-compatible SATA mode; its main disadvantage is the lack of NCQ.

# The disk is on a SATA/300 channel (as opposed to SATA/600), so its rate is limited to a theoretical 300MB/s.
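The 300MB/s figure follows from the SATA line rate: a SATA/300 link signals at 3 Gbit/s and uses 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. A small Python sketch of that arithmetic (the function name is mine, and protocol overhead beyond the encoding is ignored):

```python
def sata_payload_mb_per_s(line_rate_bits_per_s: int) -> float:
    """Upper bound on data rate for a link that uses 8b/10b encoding."""
    data_bits_per_s = line_rate_bits_per_s * 8 // 10  # 8 data bits per 10 line bits
    return data_bits_per_s / 8 / 1_000_000            # bits -> bytes -> MB


if __name__ == "__main__":
    print(sata_payload_mb_per_s(3_000_000_000))  # SATA/300 -> 300.0
    print(sata_payload_mb_per_s(6_000_000_000))  # SATA/600 -> 600.0
```

The 242 MB/s sequential read measured for the disk marked # sits below this ceiling, as expected.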

Analysis example

- As you probably already know, SSDs deliver higher performance than mechanical disks, although cost and capacity are still strong points for mechanical disks.

- WDC Blue delivers better performance than WDC Green. This is by design, as Green is a quieter and more energy-efficient series.

- Most drives (note that these are mainly for home use) don’t have sophisticated enough controllers/logic and NCQ to scale with queue depth. The Transcend SSD720, with its LSI SandForce controller, really stands out in this regard. The mechanical Seagate disks also show some scaling with queue depth (especially in a RAID).

- The dramatic effect of enabling AHCI can be seen with the benchmark of the Crucial m4 disk.

- Most mechanical disks in the test would run at higher performance if connected to an AHCI interface. In this case it would require upgrading to a different controller or motherboard.

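- Most of the mechanical disks measured perform random writes faster than random reads. I am unable to say for sure why that is, but I assume it is due to write caching on the drive (write-back caching), which lets the drive acknowledge a write before the data reaches the platters.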

- The SSDs measured read faster than they write. As far as I know, this is due to the MLC nature of both SSDs in the test.
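The queue depth observations above can be checked directly against the table. The Python sketch below divides the queued random read result by the unqueued one for each disk and ranks the disks by that factor. The numbers are copied from the table; the names of the dictionary and functions are my own.

```python
# (random read at queue depth 1, random read with a queue), both in MB/s.
RANDOM_READ = {
    "Seagate Bar. 250GB": (0.3, 0.5),
    "WDC Blue 320GB": (0.3, 0.3),
    "WDC Blue 1TB": (0.7, 0.7),
    "WDC Green 2TB Gen1": (0.3, 0.4),
    "WDC Green 2TB Gen2": (0.3, 0.4),
    "Seagate RAID1 500GB": (0.4, 1.6),
    "Transcend SSD720 256GB": (15.9, 121.8),
    "Crucial m4 64GB mSATA (IDE)": (17.7, 22.0),
    "Crucial m4 64GB mSATA (AHCI)": (19.0, 95.5),
}


def queue_scaling(unqueued: float, queued: float) -> float:
    """Factor by which performance improves when a queue is used."""
    return queued / unqueued


def rank_by_scaling(results: dict) -> list:
    """Return (disk, scaling factor) pairs, best scaling first."""
    pairs = [(name, queue_scaling(*values)) for name, values in results.items()]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, factor in rank_by_scaling(RANDOM_READ):
        print(f"{name:30s} x{factor:.2f}")
```

The SandForce-based SSD comes out on top, followed by the Crucial m4 in AHCI mode and the Seagate RAID1, while the same Crucial m4 in “IDE” mode barely scales at all. This matches the points about NCQ and AHCI made above.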

Conclusion

Measuring performance is not as complex as it seems, and it is critical. Since performance is easier to measure than to calculate, measure it, and measure it often. Only by measuring many different configurations will you be able to compare and analyze them. This is very much a scientific process, in which you collect observations, form a theory, conduct tests and discover findings.

What’s next? Well, two things come to mind. First, such data will give you food for thought about the best architecture for your project. You will have to decide about tradeoffs with the other 3 parameters of storage. Secondly, one can move beyond a single disk into combining various disks in various logical structures and measure how that affects performance.
