Those who followed my previous posts know that I am using a storage server called FreeNAS in a VMware virtualization environment. There are several benefits to using a storage server rather than a trivial storage solution. Two major ones are snapshots and replication.

In this article I will discuss the challenges of taking ZFS snapshots on a FreeNAS server that stores VMware VMs, how the existing FreeNAS-VMware integration is implemented, and what the limitations of the current implementation are. I will then propose changes to improve the integration.

By following through you will be able to understand the challenges, the existing setup and whether you are affected. If you are affected you could install a patch to resolve any issues until the fixes are incorporated in the official source code.

Basics

A snapshot is a read-only copy of a storage volume at the time the snapshot was generated. Though it acts as a copy of the volume, the snapshot effectively shares data with the live volume, which results in low additional storage requirements. Changes to the live volume do not overwrite the original data. Instead, old and new data exist side by side until the snapshot is deleted. Since snapshots are not copies of actual data and since only a small amount of metadata is written during creation, snapshots are very quick. Snapshots are great tools in backup and recovery and essentially allow you to go back in time to recover files or undo changes.

As discussed in a previous article, storage (as most technical systems) comes in layers and different types of snapshots can be made at different layers. In this article I will deal with two types of snapshots: snapshots at the storage server layer (ZFS snapshots) and snapshots at the virtualization layer (VM snapshots). ZFS snapshots are snapshots of a volume, which may contain one or more disks of Virtual Machines (VMs) as well as other files. VM snapshots are snapshots that VMware creates of a disk or disks of a VM.

Replication is a process in which a storage volume’s data is synchronized to a different volume. The target volume can be on another machine and in another location, making replication a critical mechanism to achieve high availability and to implement backup and restore procedures. Some systems allow for online replication, where data of a live volume is replicated in real-time (and synchronously) to another volume. ZFS only allows for asynchronous replication and requires that the source is read-only during the transfer. Since it is not practical to put most live volumes in read-only mode for the purpose of replication, snapshots are used often as a way to “freeze” a volume so its data can be replicated.
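As a rough illustration of how a snapshot "freezes" a volume so it can be replicated, the sketch below only builds the command lines for one incremental round: take a new snapshot, then pipe `zfs send` into `zfs receive` on another machine. The helper function, the dataset and snapshot names and the host name are all made up for this example, and nothing is executed.

```python
import shlex


def replication_pipeline(dataset, prev_snap, new_snap, target_host, target_dataset):
    """Return (snapshot command, send/receive pipeline) as shell strings.

    Every name passed in is a placeholder chosen by the caller.
    """
    def join(argv):
        return " ".join(shlex.quote(arg) for arg in argv)

    # Freeze the current state of the dataset under a new snapshot name.
    snapshot_cmd = ["zfs", "snapshot", f"{dataset}@{new_snap}"]
    # Send only the difference between the previous and the new snapshot.
    send_cmd = ["zfs", "send", "-i", f"{dataset}@{prev_snap}", f"{dataset}@{new_snap}"]
    # Receive the stream on the target machine over ssh.
    receive_cmd = ["ssh", target_host, "zfs", "receive", "-F", target_dataset]
    return join(snapshot_cmd), f"{join(send_cmd)} | {join(receive_cmd)}"
```

Because the stream is generated from two read-only snapshots, the live volume can keep changing while the transfer runs.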

Implementing snapshots in our storage system will provide us with the benefits mentioned above as well as enable us to replicate our volumes. Let’s discuss snapshots in more detail in the context of FreeNAS/VMware setup. I will not discuss replication in more detail in this article. You might want to read this easy to follow tutorial on snapshots and replication for more in depth knowledge.

Challenges of Using Snapshots with Virtualization

A key concern when making snapshots of a live VM’s data is “consistency”. Consistency refers to the ability to correctly go back to the saved state that was captured by the snapshot. You might find yourself unable to go back to the correct state if a snapshot is taken at a point in time when part of a critical change has yet to be written to disk and is still in memory (or has not been received or calculated yet). In that case only half of a critical change is captured in the snapshot, resulting in an invalid, corrupted state. This could be at the file system level or at the application level (as is the case with a database). Many systems are not sensitive to this, but many are, especially performance-sensitive systems that keep data in memory. Restoring from such a snapshot is similar to booting a machine after it has been power-cycled or has crashed. Most of the time it will boot fine and recover, but a professional has to be ready for a scenario in which the machine does not boot or the database does not come online correctly. Because of the similarity to crashing, such snapshots are called crash-consistent snapshots. Effectively, any ZFS snapshot you make of a volume containing VMs will be a crash-consistent snapshot unless you take certain measures.

Several methods are available to make a “more” consistent snapshot of a VM. Besides the trivial “shutdown”, “hibernate” or “suspend”, which disrupt the operation of the machine, we also have techniques called “memory snapshot” and “quiescing”.

A memory snapshot means that the content of the VM’s RAM is captured in sync with capturing a snapshot of the VM’s disks. The result is that a VM can be restored to the exact running state in which it has been at the time of the snapshot, as opposed to restored to a state where it needs to “boot” from disk. The downside is that, unlike a disk snapshot which is a quick logical operation, a memory snapshot actually needs to copy the memory from RAM to disk. To ensure consistency the machine is “paused” while the memory is copied, which results in the machine becoming “stunned” for the time it takes to write its RAM to disk. This period of downtime, which can easily last around a minute, is unacceptable for many uses and can result in lost connections and other timeouts.

Quiescing is a process in which a software agent running inside the VM can influence the applications and the OS of the machine to flush their buffers and bring their files and data to a consistent state on disk. A higher layer can then coordinate a snapshot to capture the data once all parties in the machine have synced. VMware provides a quiescing agent as part of their VMware Tools exactly for that and can make quiesced backups.

You may have noticed that all the available methods to make a snapshot with better consistency rely on being aware of the VM and its internals. This brings me to the observation that a ZFS snapshot at the storage server layer can only create crash-consistent snapshots. So, what should we do if we want to create snapshots with better consistency? We need to make them at the hypervisor layer. This however leaves our snapshot incompatible with the ZFS replication capabilities. Or does it?

FreeNAS VMware-snapshot support

FreeNAS offers a feature called VMware-snapshot. There is not much information about it, especially in my picky opinion. Here is what it says in the documentation.

VMware-Snapshot allows you to coordinate ZFS snapshots when using VMware as a datastore. Once this type of snapshot is created, FreeNAS® will automatically snapshot any running VMware virtual machines before taking a scheduled or manual ZFS snapshot of the dataset or zvol backing that VMware datastore. The temporary VMware snapshots are then deleted on the VMware side but still exist in the ZFS snapshot and can be used as stable resurrection points in that snapshot. These coordinated snapshots will be listed in Snapshots.

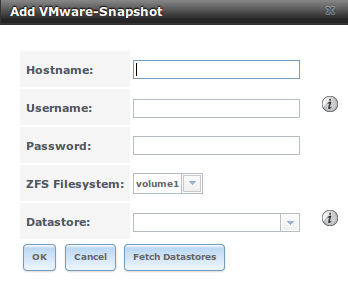

The UI includes a list of entries. Each entry includes the following fields:

FreeNAS VMware-Snapshot

Let’s analyze this. We can see that we need to give FreeNAS access to our VMware system (either ESXi or vCenter will do) and to map a ZFS volume to a VMware datastore. Before taking a ZFS snapshot of a volume, FreeNAS will “coordinate” with VMware to automatically snapshot any running VMs (that belong to the matching datastore?). FreeNAS will then execute a ZFS snapshot and then “coordinate” with VMware to delete the temporary VM snapshots. We basically get a two-stage process that involves “double” the snapshots compared to not using this integration. Why is this worth doing? Assuming that FreeNAS instructs VMware to create a “safe” snapshot (and we will see that this is indeed the case), we end up with a consistent snapshot at the storage layer, something we couldn’t get directly in one stage.

For the purpose of recovery, the ZFS snapshot, if restored (or cloned) and mounted in VMware, will contain VMs, where each VM has its “latest” state at the time of taking the ZFS crash-consistent snapshot and also a VM consistent snapshot that you could revert to. The VM snapshot is true to the point where a VM snapshot was “coordinated”, one step before the snapshot of the whole volume.
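The two-stage process can be summarized in a few lines. This is only a sketch of the ordering, not the FreeNAS implementation: the three callables are hypothetical placeholders for whatever actually talks to VMware and ZFS, and unlike the real code it only cleans up VM snapshots that were created successfully.

```python
def coordinated_snapshot(vms, create_vm_snapshot, take_zfs_snapshot, delete_vm_snapshot):
    """VM snapshots first, then one ZFS snapshot, then cleanup on the VMware side.

    Returns the VMs whose VM snapshot failed (they end up crash-consistent only).
    """
    snapshotted, failed = [], []
    for vm in vms:
        try:
            create_vm_snapshot(vm)
            snapshotted.append(vm)
        except Exception:
            failed.append(vm)
    try:
        # The ZFS snapshot captures the temporary VM snapshots that now sit on disk.
        take_zfs_snapshot()
    finally:
        # The temporary snapshots are removed from VMware but survive inside
        # the ZFS snapshot, where they can later be reverted to.
        for vm in snapshotted:
            delete_vm_snapshot(vm)
    return failed
```

The `finally` block is a design choice of this sketch: it makes sure the temporary VM snapshots do not pile up on the VMware side even if the ZFS snapshot itself fails.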

Sounds wonderful, though some questions remain unanswered in the documentation. I configured my FreeNAS to make periodic snapshots and defined the required parameters for a VMware-snapshot integration. It is recommended that you create a separate VMware user with restricted credentials for the integration. Unfortunately, when FreeNAS went to make a snapshot at the designated time, the process failed. FreeNAS sent me a “The following VM failed to snapshot” email mentioning a specific VM. That VM was using pass-through PCI devices and as such can’t be VM snapshotted while it is running (by design of VMware). I guess VMware has difficulty “pausing” a VM that has physical devices attached to it, or maybe it is an issue with keeping the state of the device and the VM in sync. Regardless, we can’t accept this. For me, an acceptable solution would be to make a crash-consistent snapshot for the problematic VM and a safe snapshot for all other VMs. Fortunately, FreeNAS is an open source project, so we can see how the integration is implemented and how we can work around the problem. Let’s do a source code review!

Source Code Review

Update: The code analyzed below and the patch that is described is relevant for FreeNAS 9.3. Since FreeNAS 9.10 was released in April 2016, the patch I have submitted and described below was integrated into the main code base. Please take that in context when reading the details from this point on.

FreeNAS source code is available at GitHub and most modules are written in Python, which is convenient. Snapshot creation is addressed by two flows, automatic (recurrent) snapshots and manual snapshots. The flows have similar but separate code. I will discuss the automatic snapshot flow, as this is what I was interested in fixing; most of it is in a file called gui\tools\autosnap.py. The manual snapshot is triggered by a user operation in the web GUI and is implemented in a series of files (gui\storage\forms.py, gui\middleware\notifier.py, etc).

For the purpose of consistency, let’s discuss the autosnap.py version that was committed on Feb 2, 2016.

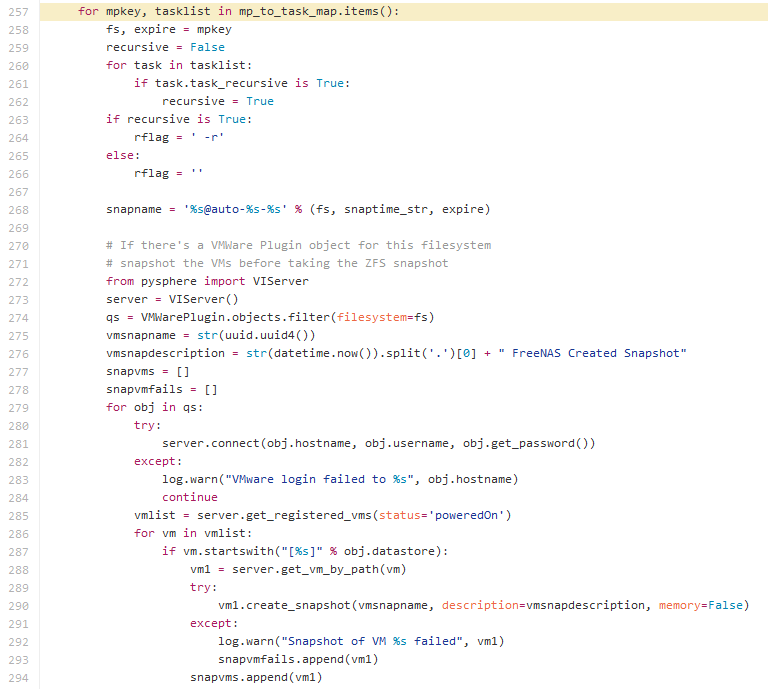

Line 257 loops on all the snapshot tasks that the system should execute. “fs” will contain the name of the ZFS volume to snapshot.

Lines 272-273 import pysphere and instantiate a VIServer object. pysphere is a Python library that provides a convenient Python interface to VMware servers. The library was recently moved from Google Code to GitHub. The original “Getting started guide” can still be accessed through a direct link at googlecode.com or in a separate wiki branch at GitHub.

Line 274 queries the mappings that we have previously created in the web UI between ZFS volumes and VMware datastores and returns all VMware-integration entries that are mapped to the current ZFS volume (fs). We loop over the entries in line 279.

In line 281 we connect to the VMware server and in 285 request a list of all the running VMs. For each running VM (286) we check that its name starts with the datastore identifier. This indicates that the main VM config files are located on the specified datastore.

For each VM that fits the criteria, a snapshot is created in line 290, named with a random, per-volume UUID that the system keeps track of. The “create_snapshot” method has two optional parameters, “quiesce” and “memory”, both “true” by default. We can see that FreeNAS is implemented to make a non-memory, quiesced snapshot.

VMs that fail the snapshot creation process end up in the snapvmfails list, while all processed VMs, regardless of outcome, end up in the snapvms list.
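Since the code itself appears only as a screenshot, here is a minimal sketch of the loop just described. It is my reconstruction from the walkthrough, not the FreeNAS source. The `server` argument is assumed to behave like a connected pysphere VIServer; the method names `get_registered_vms`, `get_vm_by_path` and `create_snapshot` are used as I understand them from the pysphere guide and should be checked against the library before relying on them.

```python
def snapshot_running_vms(server, datastore, snap_name, description):
    """Quiesced, non-memory VM snapshot of every powered-on VM on `datastore`.

    Returns (snapvms, snapvmfails): all processed VMs and the subset that failed.
    """
    snapvms, snapvmfails = [], []
    for vm_path in server.get_registered_vms(status="poweredOn"):
        # VM paths look like "[datastoreName] VMname/VMname.vmx".
        if not vm_path.startswith("[%s]" % datastore):
            continue
        vm = server.get_vm_by_path(vm_path)
        try:
            # quiesce is left at its default (true); only memory is switched off.
            vm.create_snapshot(snap_name, description=description, memory=False)
        except Exception:
            snapvmfails.append(vm_path)
        snapvms.append(vm_path)
    return snapvms, snapvmfails
```

Because the function only relies on those three methods, it can be exercised with a small fake object instead of a real VMware server.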

Next (not shown), FreeNAS will notify us by email and log if there are any failed snapshots.

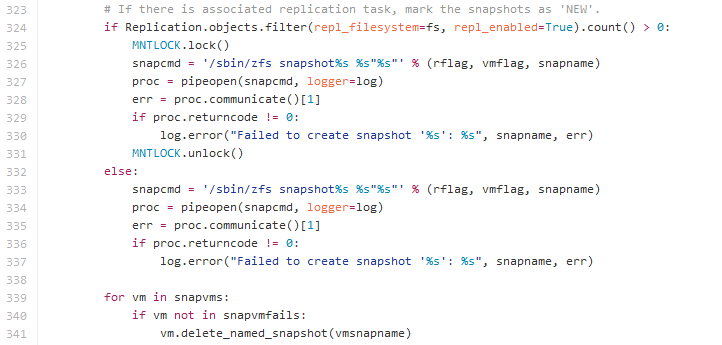

At this point, any VMs related to the ZFS volume being processed are expected to have been quiesced and snapshotted at the virtualization layer. FreeNAS can now move on to creating a ZFS snapshot.

Lines 326-328 execute “zfs snapshot” in order to create the snapshot at the storage layer. Then afterwards, in lines 339-341, FreeNAS iterates over the affected VMs and deletes the temporary snapshots using the previously generated random uuid as the key.

Implementation Issues with the Integration

VMs that can’t be snapshotted

There is a class of VM configurations that VMware is not able to snapshot. Those include powered-on VMs with pass-through PCI devices, as in my case, but also other scenarios. In these cases we can do one of two things: either skip the two-stage process and make a crash-consistent ZFS snapshot, or power down the VM and then ZFS snapshot it in a proper manner. There is no universally correct strategy. Your choice should depend on the uptime requirement of the VM, the data criticality of the VM, an estimate of how likely the data is to become corrupted in the event of crash recovery, and so on.

I have chosen to implement the simpler, riskier process for my problematic VM. It might make sense to implement several strategies and allow the user to specify a custom strategy for some of the VMs. For example, such an exception could be specified in additional GUI fields of the existing ZFS-VMware mapping entries.
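To make the idea of per-VM strategies concrete, here is a minimal sketch. The strategy names, the function and the `overrides` mapping are hypothetical. They stand in for the extra GUI fields suggested above and do not exist in FreeNAS.

```python
VM_SNAPSHOT = "vm-snapshot"  # quiesced VM snapshot, then ZFS snapshot
ZFS_ONLY = "zfs-only"        # ZFS snapshot only
POWER_OFF = "power-off"      # power the VM down, then ZFS snapshot


def choose_strategy(vm_name, powered_on, has_pci_passthrough, overrides=None):
    """Pick a snapshot strategy for one VM; a user override always wins."""
    overrides = overrides or {}
    if vm_name in overrides:
        return overrides[vm_name]
    if not powered_on:
        # Nothing is in memory, so the on-disk state is already consistent.
        return ZFS_ONLY
    if has_pci_passthrough:
        # VMware cannot snapshot this VM while it runs: accept crash consistency.
        return ZFS_ONLY
    return VM_SNAPSHOT
```

For example, `choose_strategy("router", True, True)` falls back to the riskier ZFS-only snapshot, while passing `{"router": POWER_OFF}` as `overrides` would select the safer, disruptive option for that VM alone.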

VMs that store disks in secondary datastores

While a VM will typically have one virtual disk, it can have zero or several. Besides virtual disk (vmdk) files, a VM is also defined by other files such as configuration files, logs, BIOS memory, etc. The location of the main config file (vmx) defines the “Virtual Machine Working Location”, which also functions as an identifier for the VM. The working location is written as “[datastoreName] VMname”.

When doing a FreeNAS-VMware integrated snapshot, we should VM snapshot all VMs that have virtual disks on affected datastores. However, line 287 only checks whether the configuration file is on an affected datastore. As a result, VMs that have virtual disks on a datastore other than the datastore of the working location will not be snapshotted correctly and safely.
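A broader check could look at the disk paths as well as the working location. The sketch below assumes that every path uses the “[datastoreName] path” notation described above and that the list of a VM's disk paths has already been obtained from VMware (how to get it is not shown). Both helper names are mine.

```python
import re

_DATASTORE_RE = re.compile(r"^\[([^\]]+)\]")


def datastore_of(path):
    """Return 'ds1' for a path such as '[ds1] web/web.vmx', or None."""
    match = _DATASTORE_RE.match(path)
    return match.group(1) if match else None


def vm_uses_datastore(vmx_path, disk_paths, datastore):
    """True if the working location or any virtual disk lives on `datastore`."""
    return any(datastore_of(p) == datastore for p in [vmx_path] + list(disk_paths))
```

With this check, a VM whose vmx file is on `ds1` but which keeps a data disk on `ds2` is also selected when the ZFS volume behind `ds2` is about to be snapshotted.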

Improving the Algorithm

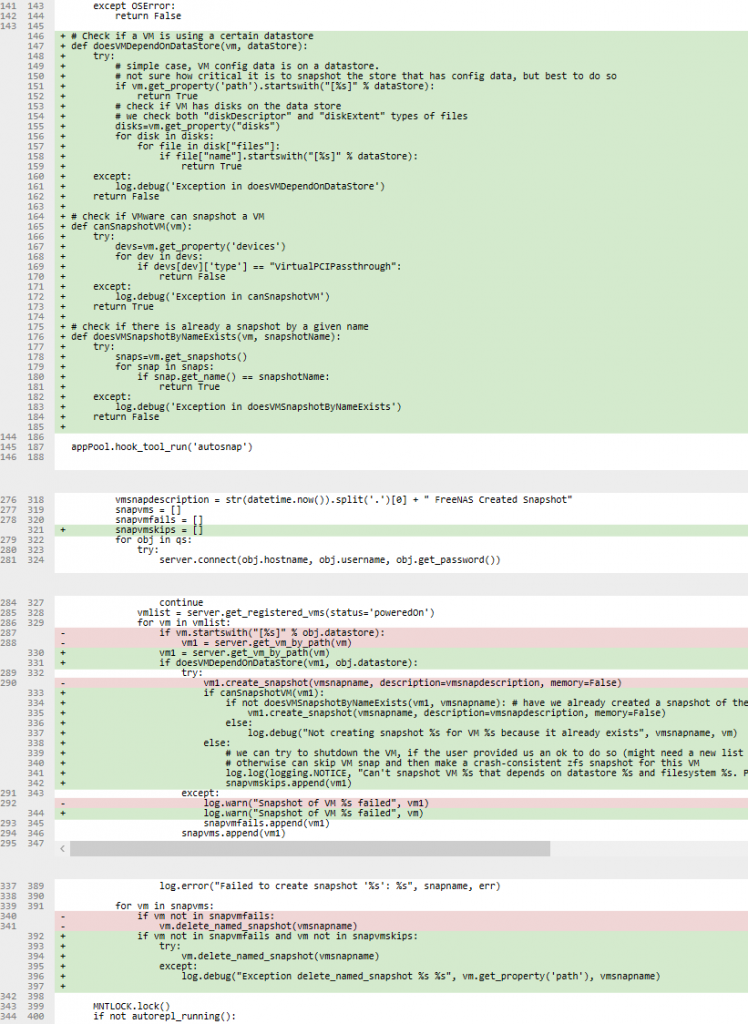

In order to address the issues mentioned above, I have implemented changes to the code that improve the algorithm and make it more sensitive to the various edge cases.

The improvements include

- Skipping VM snapshot for VMs that have PCI pass-through devices.

- Including VMs that have a virtual disk on a relevant datastore but whose working location is on a different datastore.

- Preventing an error for VMs that have two datastores that are mapped to the same ZFS volume.

You can see the commit at GitHub and download it as a patch to your system.

Applying the Patch

Update: The patch that is described is relevant for FreeNAS 9.3. Since FreeNAS 9.10 was released in April 2016, the patch I have submitted and described below was integrated into the main code base. No change is needed to enjoy the benefits in recent versions of FreeNAS.

Until the changes are integrated into the main project, it might be beneficial for you to install this as a patch on your system. The downside of doing that is that at any time an update may come in that will invalidate this patch and create an invalid result with potentially harmful effects. I suggest you only follow this route if you are sure that you understand what you are doing.

Here is the process that I am using to test this change on my system:

- Monitor autosnap.py for changes (more on this below)

- If and when the script is changed, review the changes and see if the patch is still needed and if it is still valid. Correct the patch accordingly.

- Apply the patch and overwrite the stock autosnap.py with the improved version.

- Update monitoring.

Monitoring the file is important because any update can come and reset the script to its original algorithm. That would cause the daily snapshot process to fail and we would like to avoid that.

I have written a small shell script to notify me via an email when a file content changes compared to its expected hash.

    #!/bin/sh
    # check a hash of file and email if not as expected

    # parameters
    email=you@localhost
    machine=FreeNAS
    filemonitor_emailfile=/tmp/filemonitor_emailfile

    # check usage
    usage()
    {
        echo 'Usage: sh FileMonitor.sh <file> <md5>'
    }

    if [ $# -ne 2 ]
    then
        usage
        exit 1
    fi

    # compare the digest of the file ($1) with the expected md5 ($2)
    md5 -c "$2" "$1"
    if [ $? -ne 0 ]
    then
        # emit email header
        (
            echo "To: ${email}"
            echo "Subject: FileMonitor alert for ${machine}"
            # an empty line separates the headers from the body
            echo ""
            echo "File $1 changed and is not as expected $2"
        ) > "${filemonitor_emailfile}"
        md5 "$1" >> "${filemonitor_emailfile}"

        # send the summary
        sendmail -t < "${filemonitor_emailfile}"
    fi

    # clean (-f because the file only exists if an alert was sent)
    rm -f "${filemonitor_emailfile}"
    exit 0

What is left for you is to upload this script to your FreeNAS. You can put it in one of your volumes, calculate md5 hash of the desired autosnap.py file content and then set up cron and init scripts to periodically check for the validity of the file.

Conclusions

FreeNAS goes a long way to provide consistent snapshots for VMware virtual machines. Typical scenarios are supported correctly right out of the box. However, some edge cases may generate errors or create snapshots that are less consistent than expected. Fortunately, this can be improved, as was shown, and I hope that these changes will be integrated into the main product for all to enjoy.

How do you check if the quiesce request took place?

Does it work with iSCSI for both file and dev extents?

I’ve had theoretical success with iscsi file extent only, but I don’t know yet how to check if it really worked ( if the quiesce request took place )

Hi msilveira,

Try to look in the log of the vm, located on the ESXi server in the VM’s folder, for any messages containing “snapshot” or “quiesce”. If that doesn’t help, consider enabling the logs of VMware Tools themselves: https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1007873

I have used this with device extents (zvols). I have not tried with file extents. However, quiescence is a property of a snapshot and shouldn’t be affected by how the storage is constructed.

Regards, Arik

Very very beautiful.

Maybe a similar strategy can be implemented for a kvm based hypervisor.

This library expose access to kvm guest agent: https://github.com/xolox/python-negotiator

Kvm guest agent operate inside the guest operating system quiescing the I/O.

KVM hypervisor snapshot can be invoked with libvirt python too.

https://libvirt.org/python.html

Hi Lucio,

Nice to see similar APIs are available for other hyper-visors!

I see no reason why this can’t be done for KVM too. I guess it is just a prioritization thing for the FreeNAS developers/community. If you are a KVM user and developer, you can make a POC for such support by extending the existing FreeNAS snapshot scripts. That would be only moderately difficult if you are not going to modify the GUI but will piggyback on the existing “esxi” UI.

Regards,

Arik.

This post is 4 years old, any chance this can be reviewed/renewed against current VmWare/FreeNAS/TrueNAS versions?

Hi Eduard,

True but this setup still works wonderfully for me. I have no plans to upgrade at this time.

If somebody is doing a new build and is going to revisit this, I am happy to link to an updated review/guide.

this also works on newer versions of FreeNAS with NFSv3. I am doing testing on NFSv4 and it is not looking so good.

Hey SmokinJoe,

Can you elaborate? What didn’t work?

Why NFS? This article doesn’t mention NFS at all.